To obtain the approximate state of the solar corona, we integrate the model towards a steady-state solution. Numerous medium-resolution (204 x 148 x 315) simulations were performed to refine parameter choices (most notably those for the wave-turbulence-driven heating model). Each of these simulations relaxed the corona for ~ 80 hours, and took approximately 15–30 wall-clock hours to run (depending on the number of cores used).

The preliminary prediction was performed using a mesh with 295 x 315 x 699 points (65 million cells), relaxed for 68 hours (requiring 134,000 time steps). The average time step was 1.8 seconds. It was run on 210 Pleiades Ivy Bridge nodes (4,200 processors), and took 60 wall-clock hours to complete.
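As a quick consistency check, the figures quoted for the preliminary prediction can be recombined with a few lines of arithmetic. The sketch below uses only numbers stated in the text (the 20 cores per Ivy Bridge node comes from the Pleiades description); the derived quantities, such as core-hours and wall-clock seconds per step, are our own back-of-the-envelope values, not reported measurements.

```python
import math

# Figures quoted in the text.
mesh_points = (295, 315, 699)   # points per mesh dimension
relaxed_hours = 68              # simulated (physical) time
time_steps = 134_000
nodes = 210
cores_per_node = 20             # Pleiades Ivy Bridge nodes are 20-core
wall_clock_hours = 60

# Derived quantities (simple arithmetic, not reported measurements).
cells = math.prod(mesh_points)
mean_dt_seconds = relaxed_hours * 3600 / time_steps
cores = nodes * cores_per_node
core_hours = cores * wall_clock_hours
wall_seconds_per_step = wall_clock_hours * 3600 / time_steps

print(f"cells:                 {cells:,}")
print(f"mean time step:        {mean_dt_seconds:.2f} s")
print(f"cores:                 {cores:,}")
print(f"core-hours:            {core_hours:,}")
print(f"wall seconds per step: {wall_seconds_per_step:.2f}")
```

The cell count rounds to the quoted 65 million, and the mean time step rounds to the quoted 1.8 seconds.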

The size and scope of the simulations require the use of HPC resources. We have been granted allocations on the following HPC systems and have used each of them in running the simulations. We are very grateful for the assistance provided to us by the dedicated staff at NAS, TACC, and SDSC. Our prediction would not have been possible without these resources.

Pleiades is a massively parallel supercomputer at NASA's Advanced Supercomputing Division (NAS). In June 2017 it was ranked as the 15th fastest supercomputer in the world. It is an SGI machine linked by InfiniBand in a partial hypercube topology. Its compute nodes use a variety of processor types, including Intel Xeon E5-2670 2.6GHz Sandy Bridge (16-core), Xeon E5-2680v2 2.8GHz Ivy Bridge (20-core), Xeon E5-2680v3 2.5GHz Haswell (24-core), and Xeon E5-2680v4 2.4GHz Broadwell (28-core).

The MAS code displays essentially linear scaling on all processor types up to ~ 5,000 cores.

Our allocation was provided by NASA's Advanced Supercomputing Division.

Comet is a massively parallel supercomputer at the San Diego Supercomputer Center (SDSC). It is a Dell machine consisting of Xeon E5-2680v3 2.5GHz Haswell (24-core) compute nodes linked by InfiniBand. Presented as "HPC for the 99 percent", Comet is designed for codes that run best at ~ 1,000 cores in under 2 days (such as our medium-resolution simulations described above).

The MAS code displays good scaling up to the maximum 72 nodes (1,728 cores) available for a single run.

Our allocation was provided by the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number ACI-1548562.

Stampede2 is a massively parallel supercomputer at the Texas Advanced Computing Center (TACC). It is a Dell machine currently consisting of Intel Knights Landing nodes (each with 68 cores and 272 threads). These new processors have expanded vectorization capabilities using the AVX-512 instruction set and, in addition to standard DDR4 SDRAM, have ultra-fast (4x) MCDRAM that can be used as a large high-speed cache. In June 2017, Stampede2 was ranked as the 12th fastest supercomputer in the world.

The MAS code displays good scaling on Stampede2 up to 64 nodes (4,352 cores).

Our allocation was provided by the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number ACI-1548562.

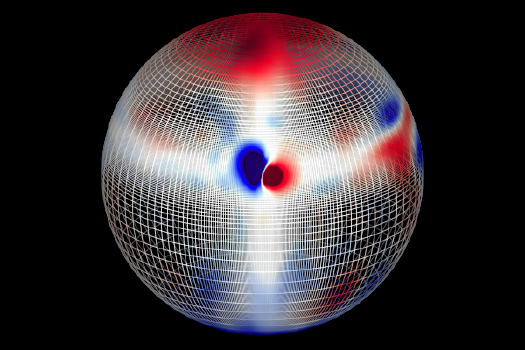

The Magnetohydrodynamic Algorithm outside a Sphere (MAS) code is written in FORTRAN and parallelized using the Message Passing Interface (MPI). It solves the equations of our MHD model using finite differences on a logically rectangular non-uniform spherical grid. The non-uniformity of the grid allows MAS to efficiently resolve small-scale structures such as the transition region and active regions, while using coarser grid spacing over the global scale. The components of the field vectors are staggered which, combined with the use of the vector potential as a primitive variable, guarantees that the magnetic field is divergence-free. Advective terms are spatially differenced using first-order upwinding, while parabolic and gradient terms are centrally differenced.
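To illustrate why staggering combined with a vector potential keeps the field divergence-free, here is a minimal sketch on a 2D non-uniform Cartesian grid. It is a simplified analogue, not the MAS spherical implementation: the grid widths, the random potential, and the function names `curl_of_az` and `divergence` are all assumptions made for the example. The potential A_z sits on cell corners, the field components sit on cell faces, and the discrete divergence at cell centers cancels term by term.

```python
import random

def curl_of_az(a, dx, dy):
    """Face-centered B = curl(A_z z_hat) from a corner-centered A_z."""
    nx, ny = len(dx), len(dy)
    # B_x = dA_z/dy lives on x-faces, at index (i, j + 1/2).
    bx = [[(a[i][j + 1] - a[i][j]) / dy[j] for j in range(ny)]
          for i in range(nx + 1)]
    # B_y = -dA_z/dx lives on y-faces, at index (i + 1/2, j).
    by = [[-(a[i + 1][j] - a[i][j]) / dx[i] for j in range(ny + 1)]
          for i in range(nx)]
    return bx, by

def divergence(bx, by, dx, dy):
    """Cell-centered divergence built from the face-centered components."""
    nx, ny = len(dx), len(dy)
    return [[(bx[i + 1][j] - bx[i][j]) / dx[i]
             + (by[i][j + 1] - by[i][j]) / dy[j]
             for j in range(ny)] for i in range(nx)]

# Hypothetical non-uniform cell widths (geometrically stretched).
dx = [0.05 * 1.15 ** i for i in range(12)]
dy = [0.02 * 1.10 ** j for j in range(10)]

# An arbitrary (random) potential: the cancellation does not depend on A_z.
rng = random.Random(0)
a = [[rng.uniform(-1.0, 1.0) for _ in range(len(dy) + 1)]
     for _ in range(len(dx) + 1)]

bx, by = curl_of_az(a, dx, dy)
div = divergence(bx, by, dx, dy)

max_b = max(abs(v) for row in bx + by for v in row)
max_div = max(abs(v) for row in div for v in row)
print(f"max |B| = {max_b:.3e}, max |div B| = {max_div:.3e}")
```

Each corner value of A_z enters the divergence of a cell twice with opposite signs, so the result is zero up to floating-point round-off regardless of the grid spacing or the values of the potential.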

The code uses an adaptive time step that conforms to the plasma flow CFL condition. As the flow time scale is often much slower than that of the magnetosonic waves, a semi-implicit term is added to the MHD equations that stabilizes the algorithm for time steps larger than the fast magnetosonic wave limit.
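The gap between the two limits can be sketched numerically. The example below compares a flow-based CFL step with the step an explicit scheme would need to resolve fast magnetosonic waves, using the maximum fast speed sqrt(c_s^2 + v_A^2). The cell widths, speeds, CFL factor, and the helper `cfl_time_step` are hypothetical values chosen for illustration; they are not taken from MAS or from the runs described here.

```python
import math

def cfl_time_step(widths, speeds, cfl=0.5):
    """Largest explicit step allowed by cfl * min(dx / speed) over all cells."""
    return cfl * min(
        (dx / s for dx, s in zip(widths, speeds) if s > 0.0),
        default=math.inf,
    )

# Hypothetical 1D profile: fine cells low down, coarse cells farther out.
widths_km = [500.0, 2000.0, 10000.0]
flow_km_s = [5.0, 50.0, 300.0]        # plasma flow speed
alfven_km_s = [2000.0, 800.0, 400.0]  # Alfven speed
sound_km_s = [150.0, 150.0, 150.0]    # sound speed

# Maximum fast magnetosonic speed: sqrt(c_s^2 + v_A^2).
fast_km_s = [math.hypot(cs, va) for cs, va in zip(sound_km_s, alfven_km_s)]
wave_km_s = [v + cf for v, cf in zip(flow_km_s, fast_km_s)]

dt_flow = cfl_time_step(widths_km, flow_km_s)
dt_fast = cfl_time_step(widths_km, wave_km_s)

print(f"flow-limited step: {dt_flow:.2f} s")
print(f"fast-wave-limited step: {dt_fast:.3f} s")
print(f"ratio: {dt_flow / dt_fast:.0f}x")
```

In this made-up profile the wave limit is set by the smallest cells, where the Alfvén speed is highest, while the flow limit is set elsewhere; the semi-implicit term is what allows the larger flow-based step to be used.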

The temporal differencing uses a predictor-corrector scheme for the advective and semi-implicit operators, where any reactive terms are included in the corrector step. The parabolic terms are operator split. The WKB-approximated Alfvén wave pressure advance and the wave turbulence advance are sub-cycled at their explicit advective CFL conditions.
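Sub-cycling can be sketched as follows: when the global time step exceeds the explicit limit of one equation, that equation is advanced with several smaller steps that together span the global step. The example uses first-order upwind advection on a periodic 1D grid as a stand-in for the wave advance; the function names, grid, speed, and CFL factor are assumptions for illustration and do not reproduce the MAS wave equations.

```python
import math

def upwind_step(u, speed, dx, dt):
    """One first-order upwind step of du/dt + speed * du/dx = 0 (speed > 0, periodic)."""
    c = speed * dt / dx
    # u[i - 1] wraps around at i = 0, which gives the periodic boundary.
    return [u[i] - c * (u[i] - u[i - 1]) for i in range(len(u))]

def subcycled_advance(u, speed, dx, dt_global, cfl=0.9):
    """Advance u over dt_global using equal sub-steps that satisfy the explicit CFL limit."""
    dt_explicit = cfl * dx / speed
    n_sub = max(1, math.ceil(dt_global / dt_explicit))
    dt_sub = dt_global / n_sub
    for _ in range(n_sub):
        u = upwind_step(u, speed, dx, dt_sub)
    return u, n_sub

# Hypothetical setup: a square pulse advected at speed 10 on a unit-spaced grid.
u0 = [1.0 if 10 <= i < 20 else 0.0 for i in range(64)]
u1, n_sub = subcycled_advance(u0, speed=10.0, dx=1.0, dt_global=1.0)

print(f"sub-steps used: {n_sub}")
print(f"total before: {sum(u0):.6f}, after: {sum(u1):.6f}")
print(f"max before: {max(u0):.3f}, after: {max(u1):.3f}")
```

Because every sub-step satisfies the explicit limit, the pulse stays bounded by its initial extremes, and the periodic upwind update conserves the total.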

All parabolic operators and the semi-implicit solves are computed by applying backward Euler, and solving the resulting system with a preconditioned conjugate gradient iterative solver. We use a non-overlapping domain decomposition with zero-fill incomplete LU factorization for the preconditioner. The operator matrices are stored in a diagonal (DIA) sparse storage format for internal grid points, while the boundary conditions are applied matrix-free. The preconditioner matrix is stored in a compressed sparse row (CSR) storage format optimized for vectorized memory access.
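The overall pattern (backward Euler, diagonal storage, preconditioned conjugate gradient) can be sketched on a small 1D diffusion problem. This is a simplified stand-in and not the MAS solver: it uses a plain Jacobi (diagonal) preconditioner rather than zero-fill incomplete LU, a single domain, a row-aligned variant of DIA storage, and hypothetical conductivities and grid sizes. The names `dia_matvec` and `pcg` are our own.

```python
import math

def dia_matvec(offsets, bands, x):
    """y = A x for a matrix stored by diagonals: bands[k][i] holds A[i, i + offsets[k]]."""
    n = len(x)
    y = [0.0] * n
    for off, band in zip(offsets, bands):
        for i in range(max(0, -off), min(n, n - off)):
            y[i] += band[i] * x[i + off]
    return y

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def pcg(matvec, b, inv_diag, tol=1e-10, max_iter=500):
    """Jacobi-preconditioned conjugate gradient for a symmetric positive-definite system."""
    x = [0.0] * len(b)
    b_norm = math.sqrt(dot(b, b))
    if b_norm == 0.0:
        return x, 0
    r = list(b)
    z = [d * ri for d, ri in zip(inv_diag, r)]
    p = list(z)
    rz = dot(r, z)
    for it in range(1, max_iter + 1):
        ap = matvec(p)
        alpha = rz / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, it
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter

# Backward Euler for du/dt = d/dx(kappa du/dx) with u = 0 at both ends:
# (I + dt * L) u_new = u_old, where L is the negative discrete diffusion operator.
n, dt = 50, 0.01
dx = 1.0 / (n + 1)
kappa = [1.0 + 9.0 * f / n for f in range(n + 1)]  # hypothetical face conductivities
w = dt / dx ** 2
main = [1.0 + w * (kappa[i] + kappa[i + 1]) for i in range(n)]
upper = [-w * kappa[i + 1] for i in range(n)]  # A[i, i+1]; last entry unused
lower = [-w * kappa[i] for i in range(n)]      # A[i, i-1]; first entry unused
offsets, bands = [-1, 0, 1], [lower, main, upper]

def apply_a(v):
    return dia_matvec(offsets, bands, v)

u_old = [math.sin(math.pi * (i + 1) * dx) for i in range(n)]
u_new, iterations = pcg(apply_a, u_old, [1.0 / d for d in main])

residual = [bi - ai for bi, ai in zip(u_old, apply_a(u_new))]
rel_residual = math.sqrt(dot(residual, residual) / dot(u_old, u_old))
print(f"converged in {iterations} iterations, relative residual {rel_residual:.2e}")
```

Storing the matrix by diagonals means the matrix-vector product is a handful of contiguous, stride-one loops, which is the property that makes the format attractive for structured grids. Here the zero boundary values are folded into the matrix by simply omitting the boundary couplings.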

We set as a boundary condition the radial component of the magnetic field at the base of the corona. This field is deduced from the HMI magnetograph aboard NASA's SDO spacecraft, which measures the line-of-sight component of the photospheric magnetic field from space.